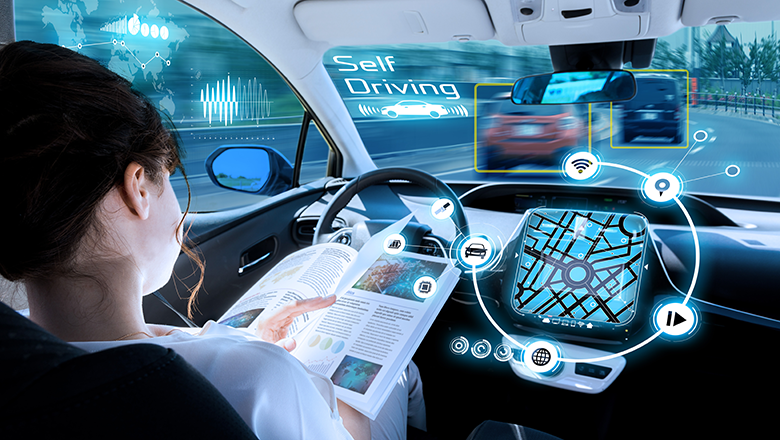

Driverless cars worse at detecting children and darker-skinned pedestrians, say scientists: Researchers call for tighter regulations following major age- and race-based discrepancies in AI autonomous systems.

Easy solution: enforce a buddy system. Every black person walking alone at night must be accompanied by a white person. /s

Weird question, but why does a car need to know whether it's a person or not? Like, regardless of whether it's a person or a car or a pole, maybe don't drive into it?

Is it about predicting whether it's going to move into your path? Well, can't you just use LIDAR to detect a moving object and predict its path? Why does it matter if it's a person?

Is it about trolley-problem situations, so it picks a pole instead of a person if it can't avoid a crash?

Conant and Ashby’s good regulator theorem in cybernetics says, “Every good regulator of a system must be a model of that system.”

The AI needs an accurate model of a human to predict how humans move. Predicting the path of a human is different from predicting the path of other objects. Humans can stand totally motionless, pivot, run across the street at a red light, suddenly stop, fall over from a heart attack, be curled up or splayed out drunk, slip backwards on some ice, etc. And it would be computationally costly, inaccurate, and pointless to model non-humans in these ways.

I also think trolley problem considerations come into play, but more like normativity in general. The consequences of driving quickly among humans are more severe than among human-height trees. I don't mind if a car drives at a normal speed on a tree-lined street, but it should slow down on a street lined with playing children who could jump out at any time.

Anyone who quotes Ashby et al. gets an upvote from me! I'm always so excited to see cybernetic thinking in the wild.

This has been the case with pretty much every single piece of computer-vision software to ever exist…

Darker individuals blend into dark backgrounds better than lighter-skinned individuals do. Dark backgrounds are more common than light ones, i.e., the absence of sufficient light is more common than 24/7 well-lit environments.

Obviously computer vision will struggle more with darker individuals.

If the computer vision model can’t detect edges around a human-shaped object, that’s usually a dataset issue or a sensor (data collection) issue… And it sure as hell isn’t a sensor issue because humans do the task just fine.

Maybe if we just, I dunno, funded more mass transit and made it more accessible? Hell, trains are way better at being automated than any single car.

The trains in California are trash. I’d love to see good ones, but this isn’t even a thought in the heads of those who run things.

Dreaming is nice… But reality sucks, and we need to deal with it. Self-driving cars are a wonderful answer, but Tesla is fucking it up for everyone.

Trains in California suck because of government dysfunction across all levels. At the municipal level, you can’t build shit because every city is actually an agglomeration of hundreds of tiny municipalities that all squabble with each other. At the regional level, you get NIMBYism that doesn’t want silly things like trains knocking down property values… And these people have a voice, because democracy I guess (despite there being a far larger group of people that would love to have trains). At the state level, you have complete funding mismanagement and project management malfeasance that makes projects both incredibly expensive and developed with no forethought whatsoever (Caltrain has how many at-grade crossings, again?).

This isn’t a train problem, it’s a problem with your piss-poor government. At least crime is down, right?

Ya know, I am not surprised that even self-driving cars somehow ended up with a case of accidental racism and wanting to murder children. Even though this is a serious issue, it's still kinda funny in a messed-up way.